Defining and Teaching AI Literacy in Schools

Exploring Definitions, Soft Power, and the Importance of AI Literacy for Teachers

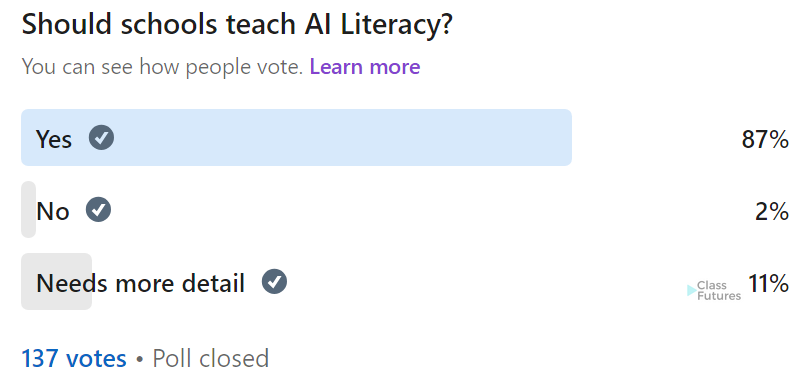

The relevance of AI literacy within a school context is growing rapidly. In a recent LinkedIn poll I conducted, 87% of respondents agreed that schools should teach AI literacy, 2% disagreed, and 11% felt more detail was needed. The comments were equally enlightening, with suggestions that AI literacy should be compulsory and questions about what arguments could be made against teaching it.

Defining AI literacy matters because we cannot teach it effectively, or help students gain real value and understanding from it, until we agree on what it is. Getting the definition and the teaching wrong could have social consequences: “In the near future, AI literacy or a lack of it and what facets of AI knowledge have been taught may turn out to be a critical social issue” (Rizvi, Waite, and Sentance, 2023). Yet defining AI literacy is itself the first challenge. As Philip Colligan, CEO of the Raspberry Pi Foundation, noted in a blog post: "The first problem is defining what AI literacy actually means" (October 2023).

So, what is AI literacy?

In this newsletter, I analyse definitions drawn from wider reading, trace their history and the influence of soft power, and explore how we might arrive at a more comprehensive definition of AI literacy.

Charles Logan, a doctoral candidate in Learning Sciences at Northwestern University, recently released a paper, "Learning About and Against Generative AI Through Mapping Generative AI's Ecologies and Developing a Luddite Praxis" (2024), in which he surveys definitions of AI literacy. Tracing the emergence of the term, Logan states that it was first used by Konishi (2015) in her work on the Japanese economy: "AI literacy is concerned with identifying new situations for applying AI, focusing on monetising often very personal artifacts." An example might be recording a recipe as digitised text for a cooking robot. Another definition comes from Kandlhofer et al. (2016): "AI literacy allows people to understand the techniques and concepts behind AI products and services instead of just learning how to use certain technologies or current applications."

As Logan (2024) points out, these definitions are driven by "speculative economic trends." He calls this focus "troubling," citing Amazon’s $10 billion loss on Alexa as an example of its limitations. Moreover, focusing solely on economics, without considering social, environmental, and political factors, seems insufficient.

Beyond this, Logan (2024) argues that even the word 'literacy' is a "contentious term laden with power." Whatever definition we adopt, AI literacy should be accessible to everyone. Logan also cites Long and Magerko (2020), who define AI literacy as: "a set of competencies that enables individuals to critically evaluate AI technologies; communicate and collaborate effectively with AI; and use AI as a tool online, at home, and in the workplace."

In my view, there is scope to discuss and define the term specifically for schools and teaching, and simply having this conversation moves the process forward. Many further questions follow, including curriculum, delivery, gender parity, diversity, equipment, and access (Rizvi, Waite, and Sentance, 2023). If we don’t engage in defining and designing AI literacy ourselves, technology companies will. In fact, they already are.

Take this example from Logan (2024): "Common Sense Education publishes an AI literacy curriculum for grades 6-12; we must consider how its parent company Common Sense Media's partnership with OpenAI (Common Sense Media, 2024) may be shaping how GenAI is framed in the lesson plans. An AI literacy that fails to examine how BigTech influences students, educators, and administrators allows corporations to exert a form of soft power through embedding preferred versions of digital literacy that lack analyses of power" (Pangrazio & Sefton-Green, 2023).

The 'soft power' exerted by technology companies is something digital leaders and educators cannot afford to be naive about. We need to learn to recognise this influence and be clear about what we mean by AI literacy. This awareness will help us teach AI literacy responsibly and ethically, ensuring a comprehensive understanding of its meaning, history, geography, and ethical implications. To achieve this, we must also prioritise our own learning as educators. As Colligan puts it: “If we’re serious about AI literacy for young people, we have to get serious about AI literacy for teachers.”

There’s a lot to play for, and I am both worried and excited.

References

Common Sense Media. (2024). AI literacy curriculum partnership with OpenAI.

Kandlhofer, M., et al. (2016). Understanding AI products and services.

Konishi, Y. (2015). AI literacy in the context of the Japanese economy.

Logan, C. (2024). Learning About and Against Generative AI Through Mapping Generative AI's Ecologies and Developing a Luddite Praxis.

Long, D., & Magerko, B. (2020). What is AI Literacy? Competencies and Design Considerations.

Pangrazio, L., & Sefton-Green, J. (2023). Digital literacy and power analysis.

Rizvi, S., Waite, J., & Sentance, S. (2023). Artificial Intelligence teaching and learning in K-12 from 2019 to 2022: A systematic literature review.